It’s been over a year since Facebook, Twitter, and YouTube banned an array of domestic extremist networks, including QAnon, boogaloo, and Oath Keepers, that had flourished on their platforms leading up to the January 6, 2021, Capitol riot. Around the same time, these companies also banned President Donald Trump, who was accused of amplifying these groups and their calls for violence.

So did the “Great Deplatforming” work? There is growing evidence that deplatforming these groups did limit their presence and influence online, though it’s still hard to determine exactly how it has impacted their offline activities and membership.

While extremist groups have dispersed to alternative platforms like Telegram, Parler, and Gab, they have struggled to grow their online numbers at the rate they did on the more mainstream social media apps, several researchers who study extremism told Recode. Although the overall effects of deplatforming are far-reaching and difficult to measure in full, several academic studies of the phenomenon over the past few years, as well as data compiled by media intelligence firm Zignal Labs for Recode, support some of these experts’ observations.

“The broad reach of these groups has really diminished,” said Rebekah Tromble, director of the Institute for Data, Democracy, and Politics at George Washington University. “Yes, they still operate on alternative platforms … but in the first layer of assessment that we might do, it’s the mainstream platforms that matter most.” That’s because extremists can reach more people on these popular platforms; in addition to recruiting new members, they can influence mainstream discussions and narratives in a way they can’t on more niche alternative platforms.

The scale at which Facebook and Twitter deplatformed domestic extremist groups — although criticized by some as being reactive and coming too late — was sweeping.

Twitter took down some 70,000 accounts associated with QAnon in January 2021, and since then the company says it has taken down an additional 100,000.

A man wearing a QAnon T-shirt waits in line for a rally featuring former President Donald Trump to begin in Perry, Georgia, on September 25, 2021. (Sean Rayford/Getty Images)

Facebook says that since expanding its policy against dangerous organizations in 2020 to include militia groups and QAnon, it has banned some 54,900 Facebook profiles and 20,600 groups related to militarized groups, and 50,300 Facebook profiles and 11,300 groups related to QAnon.

Even after these bans and policy changes, some extremism on mainstream social media goes undetected, particularly in private Facebook Groups and on private Twitter accounts. According to a report by the DC-based watchdog group Tech Transparency Project, Facebook’s recommendation algorithm was still promoting militia content to some users as recently as early January, including content from groups such as the Three Percenters, whose members have been charged with conspiracy in the Capitol riot. The report is just one example of how major social media platforms still regularly fail to find and remove overtly extremist content. Facebook said it has since taken down nine of the 10 groups listed in that report.

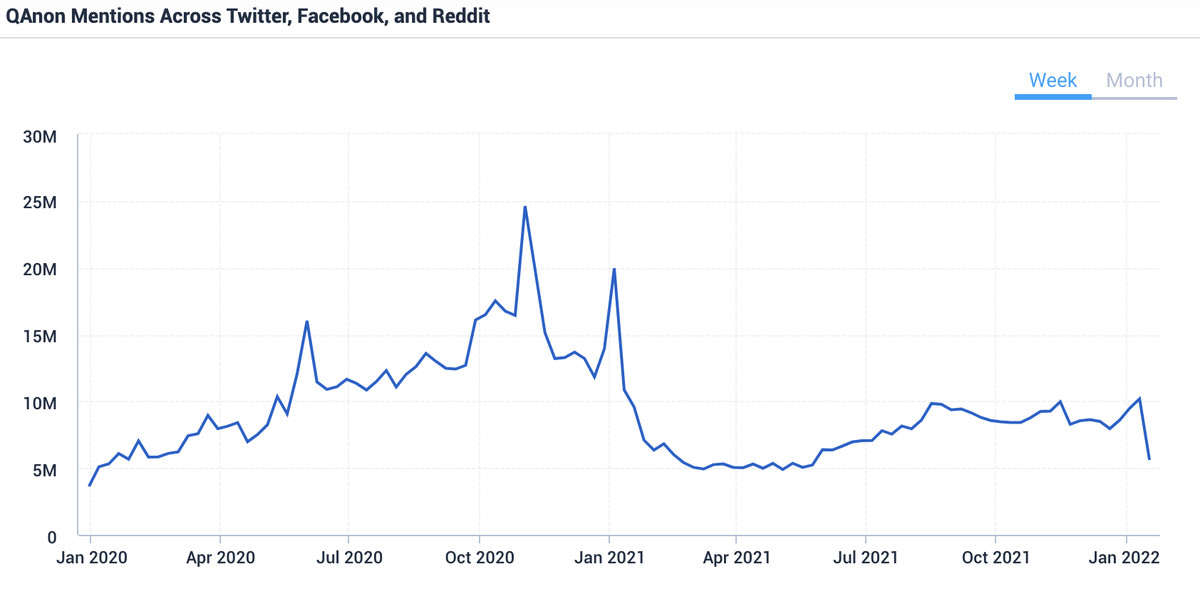

Data from Zignal Labs shows that after major social media networks banned most QAnon groups, mentions of popular keywords associated with the movement decreased. The volume of QAnon and related mentions dropped by 30 percent year over year across Twitter, Facebook, and Reddit in 2021. Specifically, mentions of popular catchphrases like “the great awakening,” “Q Army,” and “WWG1WGA” decreased by 46 percent, 66 percent, and 88 percent, respectively.

This data suggests that deplatforming QAnon may have reduced conversations among people who use such rallying catchphrases. However, even if the actual organizing and dialogue within these groups has declined, people (and the media) are still talking about many extremist groups more often: in QAnon’s case, around 279 percent more in 2021 than in 2020.

Mentions of keywords associated with QAnon across major social media networks dropped sharply after Facebook and Twitter bans in late 2020. (Source: Zignal Labs)

Several academic studies in the past few years have also quantitatively measured what happens when major social media networks like Twitter, Reddit, and YouTube deplatform accounts for posting violent, hateful, or abusive content. Some of these studies found that deplatforming was effective as a short-term solution for reducing the reach and influence of offensive accounts, though some also found that these users behaved more toxically on alternative platforms.

Another reason some US domestic extremist groups have lost much of their online reach may be Trump’s own deplatforming, since the former president was the focus of communities like QAnon and the Proud Boys. Trump himself has struggled to regain the audience he once had: he shut down his blog not long after launching it in 2021, and the alternative social media network he said he was building has been delayed.

At the same time, some of the studies found that users who migrated to other platforms often became more radicalized in their new communities. Followers who exhibited more toxic behavior moved to alternative platforms like 4chan and Gab, which have laxer rules against harmful speech than major social media networks do.

Deplatforming is one of the strongest and most controversial tools social media companies can wield to minimize the threat of antidemocratic violence. Understanding its effects and limitations is critical as the 2022 elections approach, since the campaigns will inevitably prompt controversial and harmful political speech online and will further test social media companies and their content policies.

Deplatforming doesn’t stop extremists from organizing in the shadows

The main reason deplatforming can be effective in diminishing the influence of extremist groups is simple: scale.

Nearly 3 billion people use Facebook, 2 billion people use YouTube, and 400 million people use Twitter.

But far fewer people use the alternative social media platforms that domestic extremists have turned to since the Great Deplatforming. Parler says it has 16 million registered users. Gettr says it has 4 million. Telegram, which has a large international base, had some 500 million monthly active users as of last year, but fewer than 10 percent of them are in the US.

“When you start getting into these more obscure platforms, your reach is automatically limited as far as building a popular movement,” said Jared Holt, a resident fellow at the Atlantic Council’s Digital Forensic Research Lab who recently published a report about how domestic extremists have adapted their online strategies after the January 6, 2021, Capitol riot.

Several academic papers in the past few years have aimed to quantify how much influence popular accounts lost after they were banned. In some ways, it’s not surprising that these influencers declined after they were booted from the platforms that gave them incredible reach and promotion in the first place. But these studies show just how hard it is for extremist influencers to hold onto that power, at least on major social media networks, once they’re deplatformed.

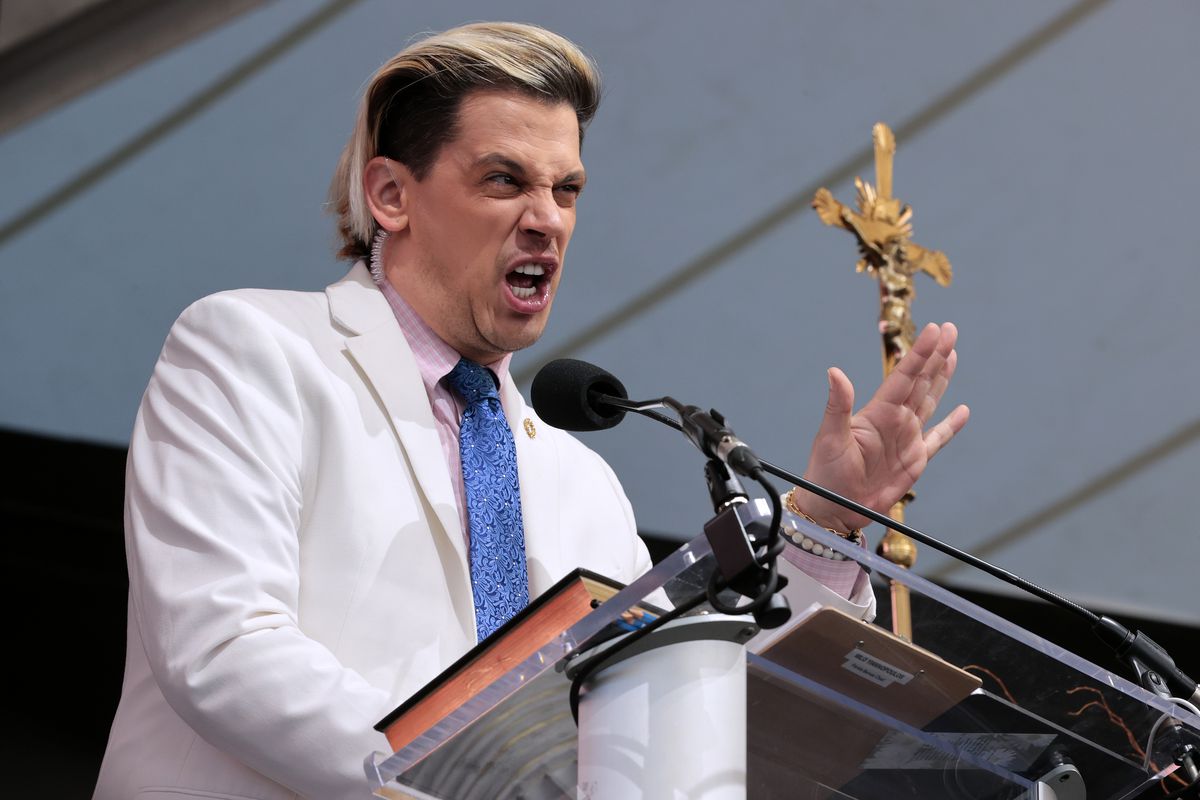

Far-right political pundit Milo Yiannopoulos hosts the “Bishops Enough Is Enough” rally in Baltimore, Maryland, on November 16, 2021. The far-right Catholic news outlet Saint Michael’s Media, also known as Church Militant, organized the conservative prayer meeting and convention. (Chip Somodevilla/Getty Images)


One study looked at what happened when Twitter banned extremist alt-right influencers Alex Jones, Milo Yiannopoulos, and Owen Benjamin. Jones was banned from Twitter in 2018 for what the company found to be “abusive behavior,” Yiannopoulos was banned in 2016 for harassing Ghostbusters actress Leslie Jones, and Benjamin lost access in 2018 for harassing a Parkland shooting survivor. The study, which examined posts referencing these influencers in the six months after their bans, found that references to them dropped by an average of nearly 92 percent on Twitter.

The study also found that the influencers’ followers who remained on Twitter showed a modest but statistically significant drop of about 6 percent in the “toxicity” of their subsequent tweets, as measured by Perspective API, an industry-standard toxicity-scoring tool. It defines a toxic comment as “a rude, disrespectful, or unreasonable comment that is likely to make you leave a discussion.”

Researchers also found that after Twitter banned the influencers, users talked less about the ideologies those influencers promoted. For example, Jones was one of the leading propagators of the false conspiracy theory that the Sandy Hook school shooting was staged. The researchers ran a regression model to measure whether mentions of Sandy Hook dropped because of Jones’s ban and found that they decreased by an estimated 16 percent in the six months after it.

“Many of the most offensive ideas that these influencers were propagating reduced in their prevalence after the deplatforming. So that’s good news,” said Shagun Jhaver, a professor of library and information science at Rutgers University who co-authored the study.

Another study from 2020 looked at the effects of Reddit banning the subreddit r/The_Donald, a popular forum for Trump supporters that was shut down in 2020 after moderators failed to control anti-Semitism, misogyny, and other hateful content, as well as the subreddit r/incels, an “involuntary celibate” community that was shut down in 2017 for hosting violent content. The study found that after the bans, these communities had significantly fewer active users, newcomers, and posts on the new platforms their followers moved to, such as 4chan and Gab. These users also posted less often, on average, on the new platforms.

But the study also found that among the subset of users who did move to fringe platforms, “toxicity” (negative social behaviors such as incivility, harassment, trolling, and cyberbullying) increased on average.

In particular, the study found evidence that users from the r/The_Donald community who migrated to the alternative website thedonald.win became more toxic, negative, and hostile when talking about their “objects of fixation,” such as Democrats and leftists.

The study supports the idea that there is an inherent trade-off with deplatforming extremism: You might reduce the size of the extremist communities, but possibly at the expense of making the remaining members of those communities even more extreme.

“We know that deplatforming works, but we have to accept that there’s no silver bullet,” said Cassie Miller, a senior research analyst at the Southern Poverty Law Center who studies domestic extremist movements. “Tech companies and government are going to have to continually adapt.”

A member of the Proud Boys makes an “okay” sign with his hand to symbolize “white power” as he gathers with others in front of the Oregon State Capitol in Salem during a far-right rally on January 8. (Mathieu Lewis-Rolland/AFP via Getty Images)

All six extremism researchers Recode spoke with said they’re worried about the more insular, localized, and radical organizing happening on fringe networks.

“We’ve had our eyes so much on national-level actions and organizing that we’re losing sight of the really dangerous activities that are being organized more quietly on these sites at the state and local level,” Tromble told Recode.

Some of this alarming organizing is still happening on Facebook, but it often flies under the radar in private Facebook Groups, which are harder for researchers and the public to monitor.

Meta, Facebook’s parent company, told Recode that its strengthened policies and stepped-up enforcement against extremists have been effective in reducing the overall volume of violent and hateful speech on its platform.

“This is an adversarial space and we know that our work to protect our platforms and the people who use them from these threats never ends. However, we believe that our work has helped to make it harder for harmful groups to organize on our platforms,” said David Tessler, a public policy manager at Facebook.

Facebook also said that, according to its own research, when the company carried out disruptions targeting hate groups and organizations, there was a short-term backlash among some audience members. The backlash eventually faded, resulting in an overall reduction in hateful content. Facebook declined to share a copy of the research, which it says is ongoing, with Recode.

Twitter declined to comment on any impact it has seen around content regarding the extremist groups QAnon, Proud Boys, or boogaloos since their suspensions from its platform, but shared the following statement: “We continue to enforce the Twitter Rules, prioritizing [taking down] content that has the potential to lead to real-world harm.”

Will the rules of deplatforming apply equally to everyone?

In the past several years, extremist ideology and conspiracy theories have increasingly penetrated mainstream US politics. At least 36 candidates running for Congress in 2022 believe in QAnon, a majority of Republicans say they believe the false conspiracy theory that the 2020 election was stolen from Trump, and one in four Americans says violence against the government is sometimes justified. The ongoing test for social media companies will be whether they’ve learned lessons from dealing with the extremist movements that spread on their platforms, and whether they will effectively enforce their rules, even when dealing with politically powerful figures.

Twitter and Facebook were long hesitant to moderate Trump’s accounts, but they decided to ban him after he refused to concede the election and then used social media to egg on the violent protesters at the US Capitol. (Facebook’s ban is set to last only until 2023.) Meanwhile, plenty of other major figures in conservative politics and the Republican Party remain active on social media and continue to propagate extremist conspiracy theories.

Rep. Marjorie Taylor Greene wears a mask reading “CENSORED” at the US Capitol on January 13, 2021. (Stefani Reynolds/Getty Images)

For example, even some members of Congress, such as Rep. Marjorie Taylor Greene (R-GA), have used their Twitter and Facebook accounts to broadcast extremist ideologies like the “Great Replacement,” a white nationalist theory that falsely asserts there is a “Zionist” plot to replace people of European ancestry with other minorities in the West.

In January, Twitter banned Greene’s personal account after she repeatedly broke its content policies by sharing misinformation about Covid-19. But she remains active on her official congressional Twitter account and on Facebook.

Banning groups like the Proud Boys or QAnon seemed a relatively straightforward decision for social media companies; banning an elected official is more complicated. Lawmakers have regulatory power, and conservatives have long claimed that social media networks like Facebook and Twitter are biased against them, even though these platforms often promote conservative figures and speech.

“As more mainstream figures are saying the types of things that normally extremists were the ones saying online, that’s where the weak spot is, because a platform like Facebook doesn’t want to be in the business of moderating ideology,” Holt told Recode. “Mainstream platforms are getting better at enforcing against extremism, but they have not figured out the solution entirely.”